We want to find a orthogonal set of vectors (), which when transformed by our matrix (), remain orthogonal (): (Transforming orthogonal matrix with produces orthogonal matrix scaled by (which is diagonal - only scales))

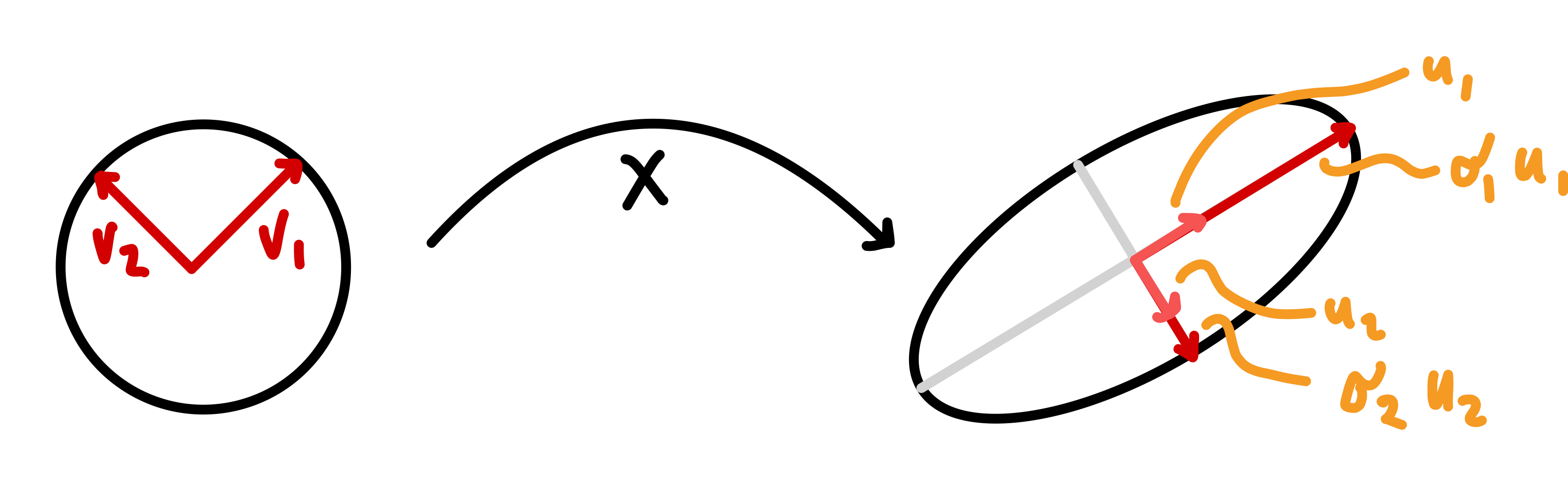

We can consider a 2D case of a sphere (circle) which is transformed by matrix (which will stretch and rotate the vector):

The n-dimensional sphere has orthogonal vectors () which is transformed to orthonormal unit vectors and stretch amounts (with being the matrix rank, typically )

The n-dimensional sphere has orthogonal vectors () which is transformed to orthonormal unit vectors and stretch amounts (with being the matrix rank, typically )

- are the principle axes

- are called the singular values

This leads to the following mathematical expression:

This is similar to the eigenvalue problem , however, it does not require the same vector on both sides.

We can write this in matrix form:

Terminology: Orthogonal matrix is a matrix such that

In other words, any real matrix can be decomposed as:

-

- is a orthonormal matrix (this is not the same as the optimised transformation matrix used in PCA)

- V is a orthogonal matrix

- is a diagonal matrix with non-negative entries in non-decreasing order down the diagonal ()

This is called the SVD of

The non-zero entries of are called the singular values of , also the number of non-zero entries in is

Solving these matrixes

is an eigenvalue problem (), with , , and , thus a eigendecomposition is used to compute

Likewise for :

Another problem you can solve with eigendecomposition

Columns of and (not ) are called the left and right singular vectors of and are associated with a singular value in the same column of

When (much more samples than input dimensions), we may trim and of may zero column to get the thin SVD

- is now (as opposed to ) (square diagonal matrix without zeros padding)

- is now (as opposed to ) Furthermore, if , we get the compact SVD which trims more zeros from the matrices (since only has non zero rows)

- is now

- is now

- is now

You can also solve these matrices in python

u, s, vt = numpy.linalg.svd(X)

# s is a 1D-array of singular values,

# and needs to be converted to a diagonal for Sigma

Sigma = np.diag(s)